
This notebook was written by Ultralytics LLC, and is freely available for redistribution under the GPL-3.0 license. For more information please visit https://github.com/ultralytics/yolov5 and https://www.ultralytics.com.

Setup

Clone repo, install dependencies and check PyTorch and GPU.

!git clone https://github.com/ultralytics/yolov5 # clone repo

%cd yolov5

%pip install -qr requirements.txt # install dependencies

import torch

from IPython.display import Image, clear_output # to display images

clear_output()

print('Setup complete. Using torch %s %s' % (torch.__version__, torch.cuda.get_device_properties(0) if torch.cuda.is_available() else 'CPU'))

Setup complete. Using torch 1.7.0+cu101 _CudaDeviceProperties(name='Tesla V100-SXM2-16GB', major=7, minor=0, total_memory=16130MB, multi_processor_count=80)

1. Inference

detect.py runs inference on a variety of sources, downloading models automatically from the latest YOLOv5 release.

!python detect.py --weights yolov5s.pt --img 640 --conf 0.25 --source data/images/

Image(filename='runs/detect/exp/zidane.jpg', width=600)

Namespace(agnostic_nms=False, augment=False, classes=None, conf_thres=0.25, device='', img_size=640, iou_thres=0.45, save_conf=False, save_dir='runs/detect', save_txt=False, source='data/images/', update=False, view_img=False, weights=['yolov5s.pt'])

Using torch 1.7.0+cu101 CUDA:0 (Tesla V100-SXM2-16GB, 16130MB)

Fusing layers...

Model Summary: 232 layers, 7459581 parameters, 0 gradients

image 1/2 /content/yolov5/data/images/bus.jpg: 640x480 4 persons, 1 buss, 1 skateboards, Done. (0.012s)

image 2/2 /content/yolov5/data/images/zidane.jpg: 384x640 2 persons, 2 ties, Done. (0.012s)

Results saved to runs/detect/exp

Done. (0.113s)
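The --conf 0.25 flag and the iou_thres=0.45 value in the Namespace above control which boxes survive post-processing. As a rough illustration only, here is a generic confidence filter followed by greedy non-maximum suppression in plain Python. This is a sketch of the general technique, not the code detect.py runs; the helper names iou and filter_detections and the sample boxes are hypothetical.

```python
# Generic confidence filtering + greedy NMS sketch (illustrative only).
# Each detection is (x1, y1, x2, y2, score); helper names are hypothetical.

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2, ...)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thres=0.25, iou_thres=0.45):
    """Drop low-confidence boxes, then keep the best box of each overlapping group."""
    candidates = sorted(
        (d for d in dets if d[4] >= conf_thres), key=lambda d: d[4], reverse=True
    )
    kept = []
    for d in candidates:
        # keep a box only if it does not overlap too much with a better one
        if all(iou(d, k) <= iou_thres for k in kept):
            kept.append(d)
    return kept


dets = [
    (100, 100, 200, 200, 0.90),
    (105, 105, 205, 205, 0.80),  # overlaps heavily with the first box
    (300, 300, 400, 400, 0.60),
    (10, 10, 50, 50, 0.10),      # below the confidence threshold
]
kept = filter_detections(dets)
print(kept)
```

Raising the confidence threshold removes more weak boxes before suppression; lowering the IoU threshold suppresses neighbours more aggressively.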

Results are saved to runs/detect. A full list of available inference sources:

2. Test

Test a model on COCO val or test-dev dataset to evaluate trained accuracy. Models are downloaded automatically from the latest YOLOv5 release. To show results by class use the --verbose flag. Note that pycocotools metrics may be 1-2% better than the equivalent repo metrics, as is visible below, due to slight differences in mAP computation.
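To see how "slight differences in mAP computation" can shift a score, the toy sketch below computes average precision for one precision-recall curve under two common conventions: integrating the area under the precision envelope, and averaging precision sampled at 101 evenly spaced recall levels. It is not a reproduction of either test.py or pycocotools; the function names and the five-detection example are assumptions made for illustration.

```python
# Toy comparison of two AP conventions on the same precision-recall curve.
# Function names and data are illustrative, not taken from any library.

def precision_envelope(precision):
    """Make precision non-increasing along recall (running max from the right)."""
    env = list(precision)
    for i in range(len(env) - 2, -1, -1):
        env[i] = max(env[i], env[i + 1])
    return env


def ap_continuous(recall, precision):
    """Area under the envelope, summed over every step in recall."""
    r = [0.0] + list(recall) + [1.0]
    p = precision_envelope([0.0] + list(precision) + [0.0])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def ap_101_point(recall, precision):
    """Mean of the envelope sampled at recall = 0.00, 0.01, ..., 1.00."""
    env = precision_envelope(precision)
    total = 0.0
    for k in range(101):
        t = k / 100
        # precision at the first operating point reaching recall t, else 0
        total += next((p for r, p in zip(recall, env) if r >= t), 0.0)
    return total / 101


# Five detections sorted by confidence; True marks a correct match, 4 objects exist.
hits = [True, True, False, True, False]
num_gt = 4
tp = 0
recall, precision = [], []
for rank, hit in enumerate(hits, start=1):
    tp += hit
    recall.append(tp / num_gt)
    precision.append(tp / rank)

ap_a = ap_continuous(recall, precision)
ap_b = ap_101_point(recall, precision)
print(f"continuous: {ap_a:.4f}  101-point: {ap_b:.4f}")
```

On this toy curve the two conventions give about 0.688 and 0.691, a small gap of the same flavour as the one described above.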

COCO val2017

Download COCO val 2017 dataset (1GB - 5000 images), and test model accuracy.

# Download COCO val2017

torch.hub.download_url_to_file('https://github.com/ultralytics/yolov5/releases/download/v1.0/coco2017val.zip', 'tmp.zip')

!unzip -q tmp.zip -d ../ && rm tmp.zip

# Run YOLOv5x on COCO val2017

!python test.py --weights yolov5x.pt --data coco.yaml --img 640 --iou 0.65

Namespace(augment=False, batch_size=32, conf_thres=0.001, data='./data/coco.yaml', device='', exist_ok=False, img_size=640, iou_thres=0.65, name='exp', project='runs/test', save_conf=False, save_json=True, save_txt=False, single_cls=False, task='val', verbose=False, weights=['yolov5x.pt'])

Using torch 1.7.0+cu101 CUDA:0 (Tesla V100-SXM2-16GB, 16130MB)

Downloading https://github.com/ultralytics/yolov5/releases/download/v3.1/yolov5x.pt to yolov5x.pt...

100% 170M/170M [00:05<00:00, 32.6MB/s]

Fusing layers...

Model Summary: 484 layers, 88922205 parameters, 0 gradients

Scanning labels ../coco/labels/val2017.cache (4952 found, 0 missing, 48 empty, 0 duplicate, for 5000 images): 5000it [00:00, 14785.71it/s]

Class Images Targets P R mAP@.5 mAP@.5:.95: 100% 157/157 [01:30<00:00, 1.74it/s]

all 5e+03 3.63e+04 0.409 0.754 0.672 0.484

Speed: 5.9/2.1/7.9 ms inference/NMS/total per 640x640 image at batch-size 32

Evaluating pycocotools mAP... saving runs/test/exp/yolov5x_predictions.json...

loading annotations into memory...

Done (t=0.43s)

creating index...

index created!

Loading and preparing results...

DONE (t=4.67s)

creating index...

index created!

Running per image evaluation...

Evaluate annotation type *bbox*

DONE (t=92.11s).

Accumulating evaluation results...

DONE (t=13.24s).

Average Precision (AP) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.492

Average Precision (AP) @[ IoU=0.50 | area= all | maxDets=100 ] = 0.676

Average Precision (AP) @[ IoU=0.75 | area= all | maxDets=100 ] = 0.534

Average Precision (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.318

Average Precision (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.541

Average Precision (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.633

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 1 ] = 0.376

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets= 10 ] = 0.617

Average Recall (AR) @[ IoU=0.50:0.95 | area= all | maxDets=100 ] = 0.670

Average Recall (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.493

Average Recall (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.723

Average Recall (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.812

Results saved to runs/test/exp

COCO test-dev2017

Download COCO test2017 dataset (7GB - 40,000 images), to test model accuracy on test-dev set (20,000 images). Results are saved to a *.json file which can be submitted to the evaluation server at https://competitions.codalab.org/competitions/20794.
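For orientation, the COCO detection results format is a JSON list with one entry per detection, where the box is given as [x, y, width, height] from its top-left corner. The sketch below builds one such entry from a corner-format box. The helper name to_coco_result, the rounding choices and the sample values are assumptions for illustration, not what test.py does internally.

```python
import json

# Minimal sketch of one COCO-style detection result entry.
# to_coco_result and the sample values below are hypothetical.

def to_coco_result(image_id, category_id, xyxy, score):
    """Convert an (x1, y1, x2, y2) box into a COCO results dict with [x, y, w, h]."""
    x1, y1, x2, y2 = xyxy
    return {
        "image_id": image_id,
        "category_id": category_id,
        "bbox": [round(x1, 3), round(y1, 3), round(x2 - x1, 3), round(y2 - y1, 3)],
        "score": round(score, 5),
    }


results = [to_coco_result(42, 1, (10.0, 20.0, 110.0, 220.0), 0.87654321)]
payload = json.dumps(results)
print(payload)
```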

# Download COCO test-dev2017

torch.hub.download_url_to_file('https://github.com/ultralytics/yolov5/releases/download/v1.0/coco2017labels.zip', 'tmp.zip')

!unzip -q tmp.zip -d ../ && rm tmp.zip # unzip labels

!f="test2017.zip" && curl http://images.cocodataset.org/zips/$f -o $f && unzip -q $f && rm $f # 7GB, 41k images

%mv ./test2017 ./coco/images && mv ./coco ../ # move images to /coco and move /coco next to /yolov5

# Run YOLOv5s on COCO test-dev2017 using --task test

!python test.py --weights yolov5s.pt --data coco.yaml --task test

3. Train

Download COCO128, a small 128-image tutorial dataset, start tensorboard and train YOLOv5s from a pretrained checkpoint for 3 epochs (note actual training is typically much longer, around 300-1000 epochs, depending on your dataset).

# Download COCO128

torch.hub.download_url_to_file('https://github.com/ultralytics/yolov5/releases/download/v1.0/coco128.zip', 'tmp.zip')

!unzip -q tmp.zip -d ../ && rm tmp.zip

Train a YOLOv5s model on COCO128 with --data coco128.yaml, starting from pretrained --weights yolov5s.pt, or from randomly initialized --weights '' --cfg yolov5s.yaml. Models are downloaded automatically from the latest YOLOv5 release, and COCO, COCO128, and VOC datasets are downloaded automatically on first use.

All training results are saved to runs/train/ with incrementing run directories, i.e. runs/train/exp2, runs/train/exp3 etc.
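The incrementing naming scheme (exp, exp2, exp3, ...) can be pictured with a small helper. This is a hedged sketch of one way to get that behaviour, not the repo's own function; next_run_dir is a hypothetical name and the demo runs in a temporary directory so nothing under runs/ is touched.

```python
import re
import tempfile
from pathlib import Path

# Sketch of an incrementing run-directory scheme: exp, exp2, exp3, ...
# next_run_dir is a hypothetical helper, not part of the YOLOv5 repo.

def next_run_dir(project, name="exp"):
    """Return project/name if unused, else project/name<N> with the next free N."""
    project = Path(project)
    first = project / name
    if not first.exists():
        return first
    pattern = re.compile(rf"^{re.escape(name)}(\d+)$")
    taken = [int(m.group(1)) for p in project.iterdir() if (m := pattern.match(p.name))]
    return project / f"{name}{max(taken, default=1) + 1}"


with tempfile.TemporaryDirectory() as tmp:
    created = []
    for _ in range(3):
        run_dir = next_run_dir(Path(tmp) / "runs" / "train")
        run_dir.mkdir(parents=True)
        created.append(run_dir.name)

print(created)  # ['exp', 'exp2', 'exp3']
```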

# Tensorboard (optional)

%load_ext tensorboard

%tensorboard --logdir runs/train

# Weights & Biases (optional)

%pip install -q wandb

!wandb login # use 'wandb disabled' or 'wandb enabled' to disable or enable

# Train YOLOv5s on COCO128 for 3 epochs

!python train.py --img 640 --batch 16 --epochs 3 --data coco128.yaml --weights yolov5s.pt --nosave --cache

Using torch 1.7.0+cu101 CUDA:0 (Tesla V100-SXM2-16GB, 16130MB)

Namespace(adam=False, batch_size=16, bucket='', cache_images=True, cfg='', data='./data/coco128.yaml', device='', epochs=3, evolve=False, exist_ok=False, global_rank=-1, hyp='data/hyp.scratch.yaml', image_weights=False, img_size=[640, 640], local_rank=-1, log_imgs=16, multi_scale=False, name='exp', noautoanchor=False, nosave=True, notest=False, project='runs/train', rect=False, resume=False, save_dir='runs/train/exp', single_cls=False, sync_bn=False, total_batch_size=16, weights='yolov5s.pt', workers=8, world_size=1)

Start Tensorboard with "tensorboard --logdir runs/train", view at http://localhost:6006/

2020-11-20 11:45:17.042357: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudart.so.10.1

Hyperparameters {'lr0': 0.01, 'lrf': 0.2, 'momentum': 0.937, 'weight_decay': 0.0005, 'warmup_epochs': 3.0, 'warmup_momentum': 0.8, 'warmup_bias_lr': 0.1, 'box': 0.05, 'cls': 0.5, 'cls_pw': 1.0, 'obj': 1.0, 'obj_pw': 1.0, 'iou_t': 0.2, 'anchor_t': 4.0, 'fl_gamma': 0.0, 'hsv_h': 0.015, 'hsv_s': 0.7, 'hsv_v': 0.4, 'degrees': 0.0, 'translate': 0.1, 'scale': 0.5, 'shear': 0.0, 'perspective': 0.0, 'flipud': 0.0, 'fliplr': 0.5, 'mosaic': 1.0, 'mixup': 0.0}

Downloading https://github.com/ultralytics/yolov5/releases/download/v3.1/yolov5s.pt to yolov5s.pt...

100% 14.5M/14.5M [00:01<00:00, 14.8MB/s]

from n params module arguments

0 -1 1 3520 models.common.Focus [3, 32, 3]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2]

2 -1 1 19904 models.common.BottleneckCSP [64, 64, 1]

3 -1 1 73984 models.common.Conv [64, 128, 3, 2]

4 -1 1 161152 models.common.BottleneckCSP [128, 128, 3]

5 -1 1 295424 models.common.Conv [128, 256, 3, 2]

6 -1 1 641792 models.common.BottleneckCSP [256, 256, 3]

7 -1 1 1180672 models.common.Conv [256, 512, 3, 2]

8 -1 1 656896 models.common.SPP [512, 512, [5, 9, 13]]

9 -1 1 1248768 models.common.BottleneckCSP [512, 512, 1, False]

10 -1 1 131584 models.common.Conv [512, 256, 1, 1]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 6] 1 0 models.common.Concat [1]

13 -1 1 378624 models.common.BottleneckCSP [512, 256, 1, False]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1]

15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

16 [-1, 4] 1 0 models.common.Concat [1]

17 -1 1 95104 models.common.BottleneckCSP [256, 128, 1, False]

18 -1 1 147712 models.common.Conv [128, 128, 3, 2]

19 [-1, 14] 1 0 models.common.Concat [1]

20 -1 1 313088 models.common.BottleneckCSP [256, 256, 1, False]

21 -1 1 590336 models.common.Conv [256, 256, 3, 2]

22 [-1, 10] 1 0 models.common.Concat [1]

23 -1 1 1248768 models.common.BottleneckCSP [512, 512, 1, False]

24 [17, 20, 23] 1 229245 models.yolo.Detect [80, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

Model Summary: 283 layers, 7468157 parameters, 7468157 gradients

Transferred 370/370 items from yolov5s.pt

Optimizer groups: 62 .bias, 70 conv.weight, 59 other

Scanning images: 100% 128/128 [00:00<00:00, 5395.63it/s]

Scanning labels ../coco128/labels/train2017.cache (126 found, 0 missing, 2 empty, 0 duplicate, for 128 images): 128it [00:00, 13972.28it/s]

Caching images (0.1GB): 100% 128/128 [00:00<00:00, 173.55it/s]

Scanning labels ../coco128/labels/train2017.cache (126 found, 0 missing, 2 empty, 0 duplicate, for 128 images): 128it [00:00, 8693.98it/s]

Caching images (0.1GB): 100% 128/128 [00:00<00:00, 133.30it/s]

NumExpr defaulting to 2 threads.

Analyzing anchors... anchors/target = 4.26, Best Possible Recall (BPR) = 0.9946

Image sizes 640 train, 640 test

Using 2 dataloader workers

Logging results to runs/train/exp

Starting training for 3 epochs...

Epoch gpu_mem box obj cls total targets img_size

0/2 5.24G 0.04202 0.06745 0.01503 0.1245 194 640: 100% 8/8 [00:03<00:00, 2.01it/s]

Class Images Targets P R mAP@.5 mAP@.5:.95: 100% 8/8 [00:03<00:00, 2.40it/s]

all 128 929 0.404 0.758 0.701 0.45

Epoch gpu_mem box obj cls total targets img_size

1/2 5.12G 0.04461 0.05874 0.0169 0.1202 142 640: 100% 8/8 [00:01<00:00, 4.14it/s]

Class Images Targets P R mAP@.5 mAP@.5:.95: 100% 8/8 [00:01<00:00, 5.75it/s]

all 128 929 0.403 0.772 0.703 0.453

Epoch gpu_mem box obj cls total targets img_size

2/2 5.12G 0.04445 0.06545 0.01667 0.1266 149 640: 100% 8/8 [00:01<00:00, 4.15it/s]

Class Images Targets P R mAP@.5 mAP@.5:.95: 100% 8/8 [00:06<00:00, 1.18it/s]

all 128 929 0.395 0.767 0.702 0.452

Optimizer stripped from runs/train/exp/weights/last.pt, 15.2MB

3 epochs completed in 0.006 hours.
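A few numbers in the training log above can be checked by hand. The sketch below assumes that a Conv block is a bias-free convolution followed by batch normalization, that Focus stacks four pixel slices (3 to 12 channels) before its convolution, and that Detect applies one 1x1 convolution with bias per scale. These are stated assumptions about the module structure, and the helper names are hypothetical; the resulting counts agree with the params column, and the batch count agrees with the 8/8 progress bars.

```python
import math

# Back-of-the-envelope checks against the training log (helper names are hypothetical).

def conv_bn_params(c_in, c_out, k):
    """Assumed structure: Conv2d without bias + BatchNorm2d (weight and bias per channel)."""
    return c_in * c_out * k * k + 2 * c_out


def detect_params(num_classes, anchors_per_scale, in_channels):
    """Assumed structure: one 1x1 Conv2d with bias per scale, (classes + 5) outputs per anchor."""
    out_ch = anchors_per_scale * (num_classes + 5)
    return sum(c * out_ch + out_ch for c in in_channels)


focus = conv_bn_params(3 * 4, 32, 3)            # layer 0: Focus [3, 32, 3]
conv1 = conv_bn_params(32, 64, 3)               # layer 1: Conv [32, 64, 3, 2]
detect = detect_params(80, 3, [128, 256, 512])  # layer 24: Detect, 80 classes, 3 anchors per scale

# 128 images at --batch 16 give 8 batches per epoch
batches_per_epoch = math.ceil(128 / 16)

print(focus, conv1, detect, batches_per_epoch)  # 3520 18560 229245 8
```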

4. Visualize

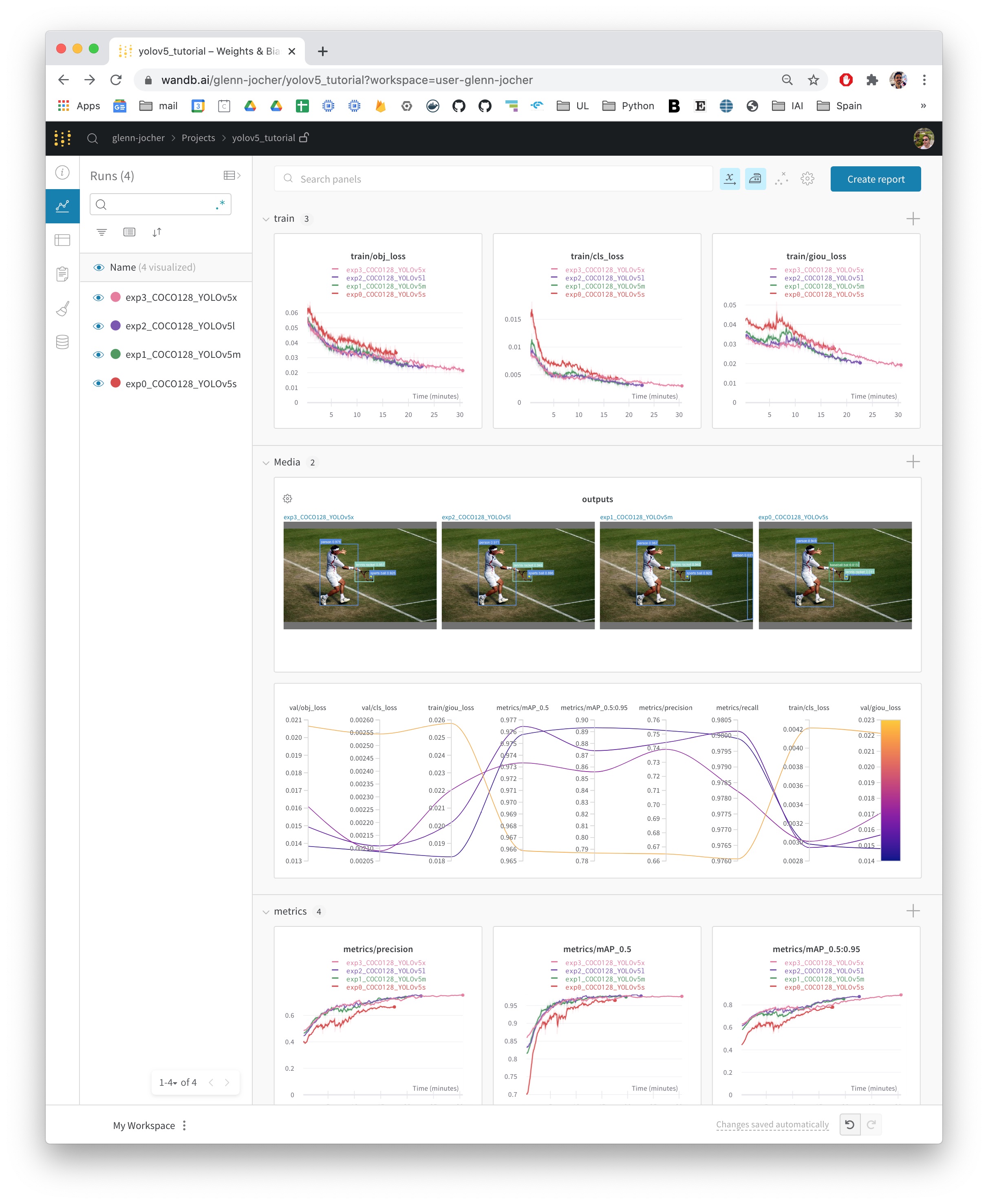

Weights & Biases Logging 🌟 NEW

Weights & Biases (W&B) is now integrated with YOLOv5 for real-time visualization and cloud logging of training runs. This allows for better run comparison and introspection, as well as improved visibility and collaboration for teams. To enable W&B, run pip install wandb and then train normally (you will be guided through setup on first use).

During training you will see live updates at https://wandb.ai/home, and you can create and share detailed Reports of your results. For more information see the YOLOv5 Weights & Biases Tutorial.

Local Logging

All results are logged by default to runs/train, with a new experiment directory created for each new training as runs/train/exp2, runs/train/exp3, etc. View train and test jpgs to see mosaics, labels, predictions and augmentation effects. Note a Mosaic Dataloader is used for training (shown below), a new concept developed by Ultralytics and first featured in YOLOv4.

Image(filename='runs/train/exp/train_batch0.jpg', width=800) # train batch 0 mosaics and labels

Image(filename='runs/train/exp/test_batch0_labels.jpg', width=800) # test batch 0 labels

Image(filename='runs/train/exp/test_batch0_pred.jpg', width=800) # test batch 0 predictions

`train_batch0.jpg` shows train batch 0 mosaics and labels

`test_batch0_labels.jpg` shows test batch 0 labels

`test_batch0_pred.jpg` shows test batch 0 _predictions_

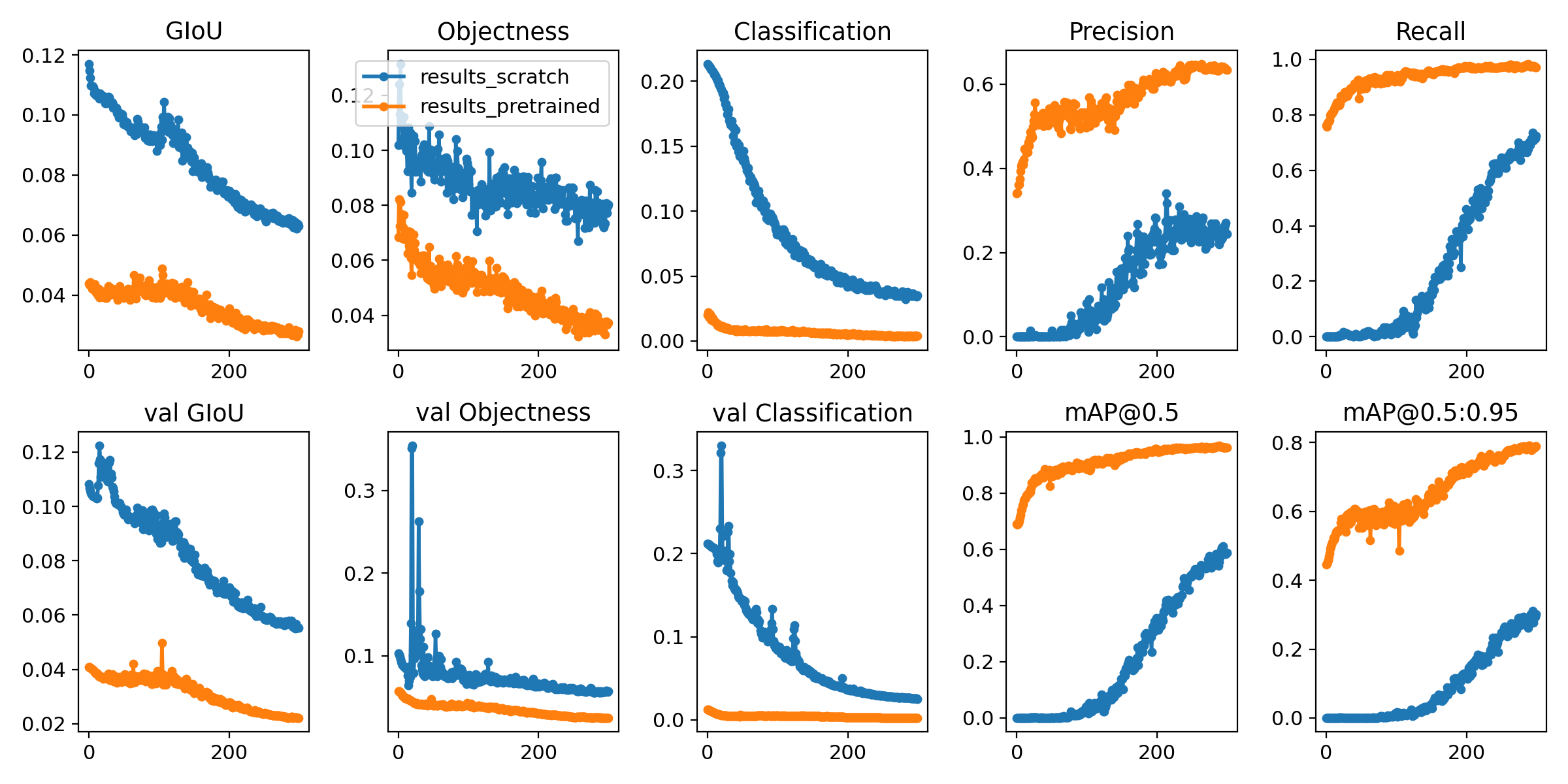
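The mosaic idea mentioned above, combining four training images into one, can be pictured as splitting a larger canvas into four tiles around a random centre point. The sketch below only computes that tile layout. It is a deliberately simplified illustration: the real dataloader also crops each image into its tile and shifts the labels, and mosaic_layout and its sampling range are hypothetical choices.

```python
import random

# Simplified mosaic layout: four tiles meeting at a random centre on a 2s x 2s canvas.
# mosaic_layout is a hypothetical helper; real mosaic code also crops images and moves labels.

def mosaic_layout(size, rng):
    """Return the centre and the (x1, y1, x2, y2) canvas rectangle for each of 4 tiles."""
    canvas = 2 * size
    xc = rng.randint(size // 2, canvas - size // 2)
    yc = rng.randint(size // 2, canvas - size // 2)
    tiles = {
        "top_left": (0, 0, xc, yc),
        "top_right": (xc, 0, canvas, yc),
        "bottom_left": (0, yc, xc, canvas),
        "bottom_right": (xc, yc, canvas, canvas),
    }
    return (xc, yc), tiles


center, tiles = mosaic_layout(640, random.Random(0))
for name, (x1, y1, x2, y2) in tiles.items():
    print(f"{name:>12}: {x2 - x1}x{y2 - y1} px at ({x1}, {y1})")
```

Because the centre moves from sample to sample, each source image appears at a different scale and position, which is part of the augmentation effect visible in train_batch0.jpg.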

Training losses and performance metrics are also logged to Tensorboard and a custom results.txt logfile which is plotted as results.png (below) after training completes. Here we show YOLOv5s trained on COCO128 to 300 epochs, starting from scratch (blue), and from pretrained --weights yolov5s.pt (orange).

from utils.plots import plot_results

plot_results(save_dir='runs/train/exp') # plot all results*.txt as results.png

Image(filename='runs/train/exp/results.png', width=800)

Environments

YOLOv5 may be run in any of the following up-to-date verified environments (with all dependencies including CUDA/CUDNN, Python and PyTorch preinstalled):

- Google Colab Notebook with free GPU:

- Kaggle Notebook with free GPU: https://www.kaggle.com/ultralytics/yolov5

- Google Cloud Deep Learning VM. See GCP Quickstart Guide

- Docker Image https://hub.docker.com/r/ultralytics/yolov5. See Docker Quickstart Guide

Appendix

Optional extras below. Unit tests validate repo functionality and should be run on any PRs submitted.

# Re-clone repo

%cd ..

%rm -rf yolov5 && git clone https://github.com/ultralytics/yolov5

%cd yolov5

# Reproduce

%%shell

for x in yolov5s yolov5m yolov5l yolov5x; do

python test.py --weights $x.pt --data coco.yaml --img 640 --conf 0.25 --iou 0.45 # speed

python test.py --weights $x.pt --data coco.yaml --img 640 --conf 0.001 --iou 0.65 # mAP

done

# Unit tests

%%shell

export PYTHONPATH="$PWD" # to run *.py files in subdirectories

rm -rf runs # remove runs/

for m in yolov5s; do # models

python train.py --weights $m.pt --epochs 3 --img 320 --device 0 # train pretrained

python train.py --weights '' --cfg $m.yaml --epochs 3 --img 320 --device 0 # train scratch

for d in 0 cpu; do # devices

python detect.py --weights $m.pt --device $d # detect official

python detect.py --weights runs/train/exp/weights/best.pt --device $d # detect custom

python test.py --weights $m.pt --device $d # test official

python test.py --weights runs/train/exp/weights/best.pt --device $d # test custom

done

python hubconf.py # hub

python models/yolo.py --cfg $m.yaml # inspect

python models/export.py --weights $m.pt --img 640 --batch 1 # export

done

# Profile

from utils.torch_utils import profile

m1 = lambda x: x * torch.sigmoid(x)

m2 = torch.nn.SiLU()

profile(x=torch.randn(16, 3, 640, 640), ops=[m1, m2], n=100)

# VOC

for b, m in zip([64, 48, 32, 16], ['yolov5s', 'yolov5m', 'yolov5l', 'yolov5x']): # zip(batch_size, model)

    !python train.py --batch {b} --weights {m}.pt --data voc.yaml --epochs 50 --cache --img 512 --nosave --hyp hyp.finetune.yaml --project VOC --name {m}
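The loop above relies on notebook-only syntax (the ! prefix and {b}/{m} interpolation). Outside a notebook, the same four commands could be assembled in plain Python as sketched below. The flags are copied from the cell above; voc_train_command is a hypothetical helper, and the commands are only printed here rather than executed.

```python
import shlex

# Build the same four VOC training commands without notebook magics.
# voc_train_command is a hypothetical helper; flags mirror the cell above.

def voc_train_command(batch, model):
    """Return the train.py argument list for one (batch size, model) pair."""
    return [
        "python", "train.py",
        "--batch", str(batch),
        "--weights", f"{model}.pt",
        "--data", "voc.yaml",
        "--epochs", "50",
        "--cache",
        "--img", "512",
        "--nosave",
        "--hyp", "hyp.finetune.yaml",
        "--project", "VOC",
        "--name", model,
    ]


commands = [
    voc_train_command(b, m)
    for b, m in zip([64, 48, 32, 16], ["yolov5s", "yolov5m", "yolov5l", "yolov5x"])
]
for cmd in commands:
    # to actually train, pass cmd to subprocess.run(cmd, check=True) from the repo root
    print(shlex.join(cmd))
```

Larger models are paired with smaller batch sizes, as in the original zip.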